Modern search engines reward more than quality content; they also expect fast, technically sound pages. Headless CMS SEO strategies let you shed the bloat of traditional platforms and control exactly what markup search bots receive. By decoupling your backend from the frontend, you gain the agility to optimize Core Web Vitals and use structured data in ways that are harder to achieve on a standard, plugin-driven WordPress setup.

Page speed feeds into Google's page experience signals and shapes how users engage with your site, which matters even more in an AI-driven search environment. Server-side rendering and edge caching help your site deliver a fast user experience, with a Largest Contentful Paint (LCP) inside the 2.5-second threshold that Google classifies as good. This architectural shift addresses common JavaScript crawling hurdles while providing the flexibility to distribute optimized content across every digital touchpoint. Taking command of your technical stack is one of the most direct paths to competing in crowded SERPs.

Key Takeaways

- Implement Server-Side Rendering (SSR) or Static Site Generation (SSG) to eliminate JavaScript crawling hurdles and ensure search bots index metadata and content in a single pass.

- Architect content models that treat SEO metadata and structured data as first-class citizens, allowing for granular control and automated schema injection across all digital touchpoints.

- Optimize Core Web Vitals by utilizing edge delivery and global Content Delivery Networks to reduce latency and keep Largest Contentful Paint (LCP) well below the 2.5-second threshold.

- Centralize SEO authority within the headless CMS to manage omnichannel consistency, automated hreflang logic, and canonical signals from a single source of truth.

Solving JavaScript Crawling With Server-Side Rendering

One of the most significant technical hurdles in a headless architecture involves how search engine bots interpret JavaScript-heavy frontends. Googlebot has become more adept at rendering client-side applications, but rendering is a separate, queued step that runs after the initial crawl, and some other crawlers do not execute JavaScript at all. This is often described as two-wave indexing, and it can delay the point at which client-rendered content and metadata become visible in search. By implementing Server-Side Rendering (SSR), you ensure that the server processes the API data and returns a fully populated HTML document to the crawler immediately. This removes the dependency on the rendering queue, so your metadata, headers, and body content are all present in the first response.
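The idea can be illustrated with a minimal sketch, assuming a hypothetical `CmsEntry` shape and a plain string template instead of any particular framework. It shows the server assembling the complete document, metadata included, before anything is sent to the crawler.

```typescript
// Hypothetical shape of an entry returned by a headless CMS API.
interface CmsEntry {
  slug: string;
  metaTitle: string;
  metaDescription: string;
  heading: string;
  bodyHtml: string; // assumed to be sanitized HTML produced by the CMS
}

// Escape text before placing it in HTML text or attribute positions.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Build the whole document on the server so the first response already
// contains the title, description, canonical tag, and body content.
function renderEntryToHtml(entry: CmsEntry, siteOrigin: string): string {
  const canonical = `${siteOrigin}/${entry.slug}`;
  return [
    "<!doctype html>",
    '<html lang="en">',
    "<head>",
    `<title>${escapeHtml(entry.metaTitle)}</title>`,
    `<meta name="description" content="${escapeHtml(entry.metaDescription)}">`,
    `<link rel="canonical" href="${escapeHtml(canonical)}">`,
    "</head>",
    "<body>",
    `<h1>${escapeHtml(entry.heading)}</h1>`,
    entry.bodyHtml,
    "</body>",
    "</html>",
  ].join("\n");
}
```

In a real project this function would sit behind a request handler in whichever framework you use; the point is only that no client-side JavaScript is needed to see the content.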

Static Site Generation (SSG) offers an alternative approach by pre-building every page of your site into static files during the deployment phase. This method provides a performance boost for SEO because it removes the need for real-time data fetching when a user or bot visits the URL. Since the content is already present in the source code, search engines can crawl your site with maximum efficiency and minimal load on your infrastructure. Whether you choose SSR for dynamic content or SSG for speed, both methods solve the fundamental visibility issues inherent in decoupled environments.
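As a rough sketch of the build-time approach, the function below maps every CMS entry to an output file path and pre-rendered HTML. The `StaticPage` shape, the `render` callback, and the `slug/index.html` layout are illustrative assumptions; a real generator would also write the result to disk.

```typescript
// Hypothetical page data fetched from the CMS once, at build time.
interface StaticPage {
  slug: string; // "" is treated as the home page
  title: string;
  bodyHtml: string;
}

// Stand-in for whatever template function the project uses.
type RenderPage = (page: StaticPage) => string;

// Map a slug to the file a static host would serve for that URL.
function outputPathFor(slug: string): string {
  return slug === "" ? "index.html" : `${slug}/index.html`;
}

// Pre-render every page during the build; nothing is fetched per request.
function buildStaticSite(
  pages: StaticPage[],
  render: RenderPage
): Record<string, string> {
  const files: Record<string, string> = {};
  for (const page of pages) {
    files[outputPathFor(page.slug)] = render(page);
  }
  return files;
}
```

Because the output is just a path-to-HTML mapping, the same render function can be shared between an SSG build and an SSR handler.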

Bridging the gap between modern web development and search visibility requires a proactive stance on how your frontend framework communicates with the backend. Marketing managers and developers must work together to ensure that the initial HTML payload contains all the structured data and canonical tags required for ranking. By moving the rendering process away from the user's browser and onto the server or the build step, you satisfy both the technical requirements of Core Web Vitals and the content requirements of search algorithms. This architectural choice transforms a potential crawling liability into a competitive advantage that traditional CMS platforms struggle to match.

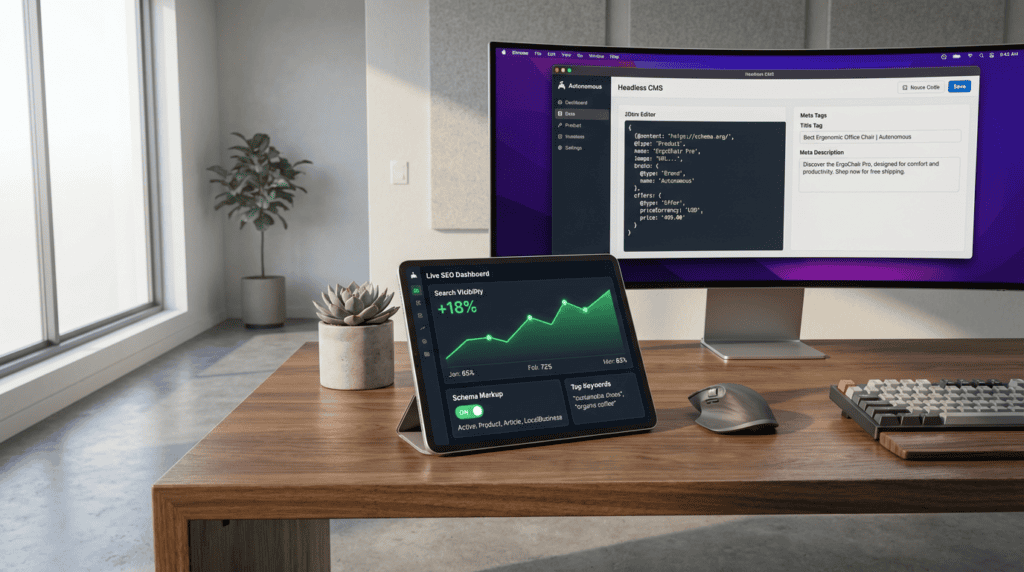

Architecting Structured Content for Schema and Metadata

Building a high-performing headless site requires a shift from page-based thinking to a structured content mindset. Instead of burying SEO elements within a rich text editor, developers must architect content models that treat metadata as first-class citizens. This involves creating dedicated API fields for canonical tags, meta titles, and descriptions that marketers can update without touching the codebase. By decoupling these elements, you ensure that SEO data remains consistent across every frontend consumer, from web browsers to mobile applications. This level of granular control allows for an agile marketing strategy that adapts to search engine algorithm changes in real time.
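One way to picture such a content model is the sketch below. The field names (`metaTitle`, `metaDescription`, `canonicalUrl`, `noIndex`) are hypothetical, not taken from any specific CMS. It shows optional SEO overrides living alongside the content, with sensible fallbacks when a marketer leaves them blank.

```typescript
// Hypothetical SEO field group attached to every content type.
interface SeoFields {
  metaTitle?: string;
  metaDescription?: string;
  canonicalUrl?: string;
  noIndex?: boolean;
}

// Hypothetical article entry as delivered by the CMS API.
interface ArticleEntry {
  title: string;
  slug: string;
  summary: string;
  seo: SeoFields;
}

interface ResolvedSeo {
  title: string;
  description: string;
  canonical: string;
  robots: string;
}

// Prefer the marketer's explicit values, fall back to content fields.
function resolveSeo(entry: ArticleEntry, siteOrigin: string): ResolvedSeo {
  return {
    title: entry.seo.metaTitle ?? entry.title,
    description: entry.seo.metaDescription ?? entry.summary,
    canonical: entry.seo.canonicalUrl ?? `${siteOrigin}/${entry.slug}`,
    robots: entry.seo.noIndex ? "noindex, follow" : "index, follow",
  };
}
```

Every frontend that consumes the API can call the same resolver, which keeps titles, descriptions, and canonicals consistent across consumers.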

Effective schema implementation in a headless environment relies on the ability to map structured content fields directly to JSON-LD scripts. Rather than relying on rigid plugins, developers can build flexible content types that capture specific data points like product prices, review ratings, or event dates. These fields are then fetched via API and injected into the page head during server-side rendering or static generation. This process ensures that search engine crawlers receive rich, structured data that is perfectly synchronized with the visible on-page content. By automating the generation of schema through these predefined models, you reduce the risk of manual tagging errors and improve your chances of earning rich snippets.
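The mapping from content fields to JSON-LD might look like the following sketch. The `ProductEntry` fields are assumed for illustration; the output uses the schema.org `Product`, `Offer`, and `AggregateRating` types.

```typescript
// Hypothetical product fields captured in the CMS content model.
interface ProductEntry {
  name: string;
  description: string;
  price: number;
  currency: string; // ISO 4217 code, for example "USD"
  ratingValue?: number;
  reviewCount?: number;
}

// Map CMS fields to a schema.org Product object.
function productJsonLd(product: ProductEntry): Record<string, unknown> {
  const data: Record<string, unknown> = {
    "@context": "https://schema.org",
    "@type": "Product",
    name: product.name,
    description: product.description,
    offers: {
      "@type": "Offer",
      price: product.price.toFixed(2),
      priceCurrency: product.currency,
    },
  };
  // Only emit rating markup when both values exist in the CMS.
  if (product.ratingValue !== undefined && product.reviewCount !== undefined) {
    data["aggregateRating"] = {
      "@type": "AggregateRating",
      ratingValue: product.ratingValue,
      reviewCount: product.reviewCount,
    };
  }
  return data;
}

// Serialize for the document head. "<" is escaped so a field value that
// contains "</script>" cannot terminate the tag early.
function jsonLdScriptTag(data: Record<string, unknown>): string {
  const json = JSON.stringify(data).replace(/</g, "\\u003c");
  return `<script type="application/ld+json">${json}</script>`;
}
```

Generating the markup from the same fields that render the visible page is what keeps the structured data and the on-page content in sync.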

Beyond basic tags, a robust content model must account for accessibility and performance through specialized media fields. Marketing teams should have direct access to manage alt text, image dimensions, and focal points within the CMS to optimize for Core Web Vitals and image search. Well-structured models also include fields for social sharing cards and open graph data to ensure brand consistency across platforms. When these technical requirements are integrated into the content entry workflow, the gap between development and marketing narrows significantly. This collaborative architecture results in a site that is not only fast and modern but also fully optimized for the complexities of modern search discovery.

Optimizing Core Web Vitals Through Edge Delivery

Headless architectures transform how content reaches the end user by utilizing global Content Delivery Networks to serve assets from the network edge. Unlike monolithic platforms that often struggle with server response times due to heavy database queries, a decoupled setup allows pre-rendered pages to be cached geographically closer to the visitor. This placement reduces Time to First Byte and improves Core Web Vitals scores by cutting the round-trip latency to a distant origin server. By offloading the heavy lifting to the edge, marketing teams can ensure that their high-quality content loads quickly regardless of the user's location.
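A small sketch of how a pre-rendered page might be marked as cacheable at the edge follows. The policy shape and the TTL values are assumptions; `s-maxage` and `stale-while-revalidate` are standard `Cache-Control` directives, though support for the latter varies between CDNs.

```typescript
// Hypothetical per-route caching policy.
interface EdgeCachePolicy {
  edgeTtlSeconds: number; // how long the CDN may serve its cached copy
  staleWhileRevalidateSeconds: number; // window for serving stale while refreshing
}

// Build a Cache-Control value that lets shared caches (the CDN) keep the
// page while browsers revalidate on each visit.
function cacheControlFor(policy: EdgeCachePolicy): string {
  return [
    "public",
    "max-age=0", // browsers always check back with the CDN
    `s-maxage=${policy.edgeTtlSeconds}`,
    `stale-while-revalidate=${policy.staleWhileRevalidateSeconds}`,
  ].join(", ");
}
```

For example, `cacheControlFor({ edgeTtlSeconds: 3600, staleWhileRevalidateSeconds: 86400 })` yields `public, max-age=0, s-maxage=3600, stale-while-revalidate=86400`. Pairing a header like this with cache purging on publish keeps edge copies fresh after content updates.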

Modern headless frameworks further enhance Core Web Vitals by implementing automated image optimization and smart resource prioritization at the delivery layer. These systems dynamically resize and compress images into next-generation formats like WebP or AVIF before they reach the browser, which cuts image bytes and helps Largest Contentful Paint. Keeping Cumulative Layout Shift low is a separate concern: it depends on reserving space for each image by emitting its width and height, which the CMS can store alongside the asset. Developers can also use fine-grained control over script execution to prevent render-blocking elements from stalling the initial page load. This level of technical precision keeps the site visually stable and fast, which feeds the page experience signals that search engines take into account.
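To make this concrete, here is a hedged sketch that turns a CMS image record into `<picture>` markup. The `CmsImage` shape and the `w` and `fm` query parameters are hypothetical, since real image services name their parameters differently. It shows modern formats offered first, and explicit dimensions emitted so the browser can reserve space.

```typescript
// Hypothetical image asset as stored in the CMS.
interface CmsImage {
  url: string; // base asset URL
  alt: string;
  width: number; // intrinsic pixel width
  height: number; // intrinsic pixel height
}

function escapeAttr(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;");
}

// Hypothetical resize and format parameters understood by the image service.
function variantUrl(image: CmsImage, width: number, format: string): string {
  return `${image.url}?w=${width}&fm=${format}`;
}

// widths is assumed to be non-empty. Set priority for the likely LCP image.
function pictureMarkup(image: CmsImage, widths: number[], priority: boolean): string {
  const srcset = (format: string): string =>
    widths.map((w) => `${variantUrl(image, w, format)} ${w}w`).join(", ");
  const fallback = variantUrl(image, Math.max(...widths), "jpg");
  const loading = priority
    ? 'loading="eager" fetchpriority="high"'
    : 'loading="lazy"';
  return [
    "<picture>",
    `<source type="image/avif" srcset="${escapeAttr(srcset("avif"))}" sizes="100vw">`,
    `<source type="image/webp" srcset="${escapeAttr(srcset("webp"))}" sizes="100vw">`,
    // width and height let the browser reserve space before the image loads.
    `<img src="${escapeAttr(fallback)}" alt="${escapeAttr(image.alt)}" width="${image.width}" height="${image.height}" ${loading} decoding="async">`,
    "</picture>",
  ].join("\n");
}
```

Lazy loading is left off for the priority image on purpose, because deferring the largest above-the-fold image tends to hurt LCP.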

A decoupled frontend also helps with Interaction to Next Paint (INP), which depends on how much work the browser's main thread has to do. Edge delivery does not change that workload by itself, but because the frontend is separate from the backend CMS, you control exactly which scripts ship, and the browser spends less time processing inherited theme and plugin code and more time responding to user input. This architectural efficiency allows for a highly responsive interface that feels fluid and intuitive, even on low-powered mobile devices. By merging advanced web architecture with SEO best practices, businesses can overcome the performance bottlenecks that frequently plague traditional content management systems.

Managing Omnichannel SEO and Hreflang Logic

Maintaining search engine authority across a decoupled architecture requires a centralized approach to managing metadata and canonical signals. Since content is distributed to various frontends like mobile apps, web browsers, and IoT devices, you must ensure that your headless CMS acts as the single source of truth for SEO attributes. By defining global canonical rules within the CMS schema, you prevent duplicate content issues that often arise when the same API response is rendered on multiple subdomains or platforms. This strategy ensures that search engines recognize the primary version of your content regardless of how many different frontends are consuming the data. Developers should prioritize consistent URL structures and schema markup to provide clear signals to crawlers across every touchpoint.
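A global canonical rule can be sketched as a single normalizing function that every frontend calls. The specific rules here (drop query strings and fragments, collapse duplicate slashes, strip trailing slashes, lowercase the path) are an assumed policy chosen for illustration, not a universal standard.

```typescript
// Normalize any requested path to one canonical URL on the primary origin.
function canonicalUrlFor(path: string, primaryOrigin: string): string {
  // Drop query strings and fragments such as tracking parameters.
  const pathOnly = path.replace(/[?#].*$/, "");
  // Ensure a single leading slash and collapse repeated slashes.
  const collapsed = ("/" + pathOnly).replace(/\/{2,}/g, "/");
  // Strip trailing slashes, except for the root path.
  const trimmed = collapsed.length > 1 ? collapsed.replace(/\/+$/, "") : collapsed;
  // Assumed policy: paths are case-insensitive, so lowercase them.
  return primaryOrigin.replace(/\/+$/, "") + trimmed.toLowerCase();
}

// Render the tag for the document head. The URL is assumed to be
// attribute-safe after normalization.
function canonicalLinkTag(path: string, primaryOrigin: string): string {
  return `<link rel="canonical" href="${canonicalUrlFor(path, primaryOrigin)}">`;
}
```

With this in place, a request for `/Blog/Post/?utm_source=x` on any subdomain resolves to the same canonical, `https://www.example.com/blog/post`, when `https://www.example.com` is the primary origin.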

International SEO adds another layer of complexity that is best managed through automated logic within your headless environment. Implementing hreflang tags manually across thousands of pages is prone to error, so you should use the CMS to map relationships between localized versions of content automatically. When a new language variant is published, the system should regenerate the cross-references for every variant, since hreflang annotations need to be reciprocal and each page should also list itself, and expose them through the API so each frontend can place them in the document head. This automation helps Google serve the correct regional version to users and keeps localized pages from competing with each other across your global domains. Using server-side rendering or static site generation allows these tags to be crawled without relying on client-side execution.
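The cross-referencing logic can be sketched as follows, assuming the CMS exposes a list of localized variants for each piece of content (the `LocaleVariant` shape is hypothetical). Every variant page renders the same full set of tags, including one for itself, which keeps the annotations reciprocal.

```typescript
// Hypothetical localized variant record linked in the CMS.
interface LocaleVariant {
  hreflang: string; // for example "en-us" or "de-de"
  url: string; // absolute URL, assumed to be attribute-safe
}

// Produce the identical tag set for every variant of one piece of content.
function hreflangTags(variants: LocaleVariant[], defaultUrl?: string): string[] {
  const tags = variants.map(
    (v) => `<link rel="alternate" hreflang="${v.hreflang}" href="${v.url}">`
  );
  // Optional fallback for users whose language matches no variant.
  if (defaultUrl !== undefined) {
    tags.push(`<link rel="alternate" hreflang="x-default" href="${defaultUrl}">`);
  }
  return tags;
}
```

Because the tags are derived from the variant list rather than typed by hand, publishing a new language only requires adding one record in the CMS and re-rendering the affected pages.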

Achieving omnichannel success also depends on how well you synchronize structured data and social signals across your digital ecosystem. A robust headless strategy involves creating a shared library of JSON-LD templates that the CMS populates dynamically for every delivery channel. This ensures that rich snippets remain consistent whether a user finds your content through a traditional search engine or an AI-powered discovery tool. By decoupling the presentation layer, you gain the agility to update SEO configurations globally without redeploying multiple codebases. This centralized control reduces technical debt and allows marketing teams to respond quickly to algorithm changes while maintaining high performance standards for Core Web Vitals.

Maximizing Search Performance with Headless Architectures

Mastering headless CMS SEO strategies provides a competitive edge by combining strong site performance with granular data control. While traditional platforms often struggle with bloated code and slow server response times, a decoupled architecture allows for fast page speeds and better Core Web Vitals. By using server-side rendering and edge caching, businesses can make their content available to search engine crawlers in the first response, without the common pitfalls of client-side JavaScript. This technical foundation not only supports organic rankings but also provides a resilient framework that scales across various digital touchpoints and AI-driven search interfaces.

Bridging the gap between developer flexibility and marketing autonomy requires a deliberate roadmap focused on integrated tooling. Teams should prioritize building custom SEO fields within the CMS interface so that marketers can manage metadata, canonical tags, and structured data without touching the codebase. This approach empowers content creators to react to market trends in real time while developers maintain the integrity of the high-performance frontend. Success depends on establishing clear workflows where technical architecture supports, rather than hinders, the strategic goals of the SEO team.

As search technology evolves, the adaptability of a headless strategy ensures your brand remains ahead of the curve. The ability to push structured content through APIs means your site is prepared for voice search, smart devices, and evolving schema requirements. By investing in a well-executed decoupled environment, you eliminate the technical debt associated with legacy systems and create a future-proof digital presence. Ultimately, the synergy between developer innovation and marketing agility defines the next generation of search engine dominance.

Frequently Asked Questions

1. How does a headless CMS improve my search engine rankings?

A headless CMS removes the technical bloat associated with traditional platforms, allowing you to optimize Core Web Vitals and site speed more effectively. By decoupling the frontend, you gain total control over how search bots interact with your data and ensure your site meets the highest performance standards.

2. Why is Server-Side Rendering (SSR) necessary for SEO?

SSR ensures that search engine crawlers receive a fully populated HTML document immediately rather than waiting for JavaScript to execute. This avoids the two-wave indexing delay, because your metadata and content are already present in the first response the crawler receives.

3. What is the difference between SSR and Static Site Generation (SSG) for SEO?

SSR processes data on the server for every request, which is ideal for dynamic content, while SSG pre-builds pages into static files during deployment for maximum speed. Both methods solve the common JavaScript crawling hurdles that often plague client-side applications.

4. Can I achieve a fast Largest Contentful Paint (LCP) with a headless architecture?

Yes, a headless stack allows you to implement edge caching and optimized rendering paths to keep your LCP well under the 2.5-second threshold. LCP is one of the Core Web Vitals that Google uses as a page experience signal, so meeting the threshold supports visibility in today’s performance-driven search environment.

5. Does a headless CMS handle structured data better than WordPress?

It can. Where WordPress typically relies on plugins to generate schema, a headless architecture lets you map your own content fields to custom structured data and inject it directly into your frontend code without platform limitations. This allows you to communicate more precisely with AI-driven search engines and compete more effectively in crowded search results.

6. How do I ensure my JavaScript content is indexed quickly?

The most effective way to ensure rapid indexing is to avoid relying on client-side rendering for your primary content. By moving the rendering process to the server or pre-building pages, you provide crawlers with instant access to your headers, body text, and metadata.